The Shift from Actors to Planners

A few weeks ago, I was asked to review a body of work for an engineer who was up for promotion. It consisted of roughly a dozen individual pieces of content – Word docs, PDFs, diagrams, and HTML files. Each document served as evidence that the candidate had mastered the complex systems and architectures required for promotion.

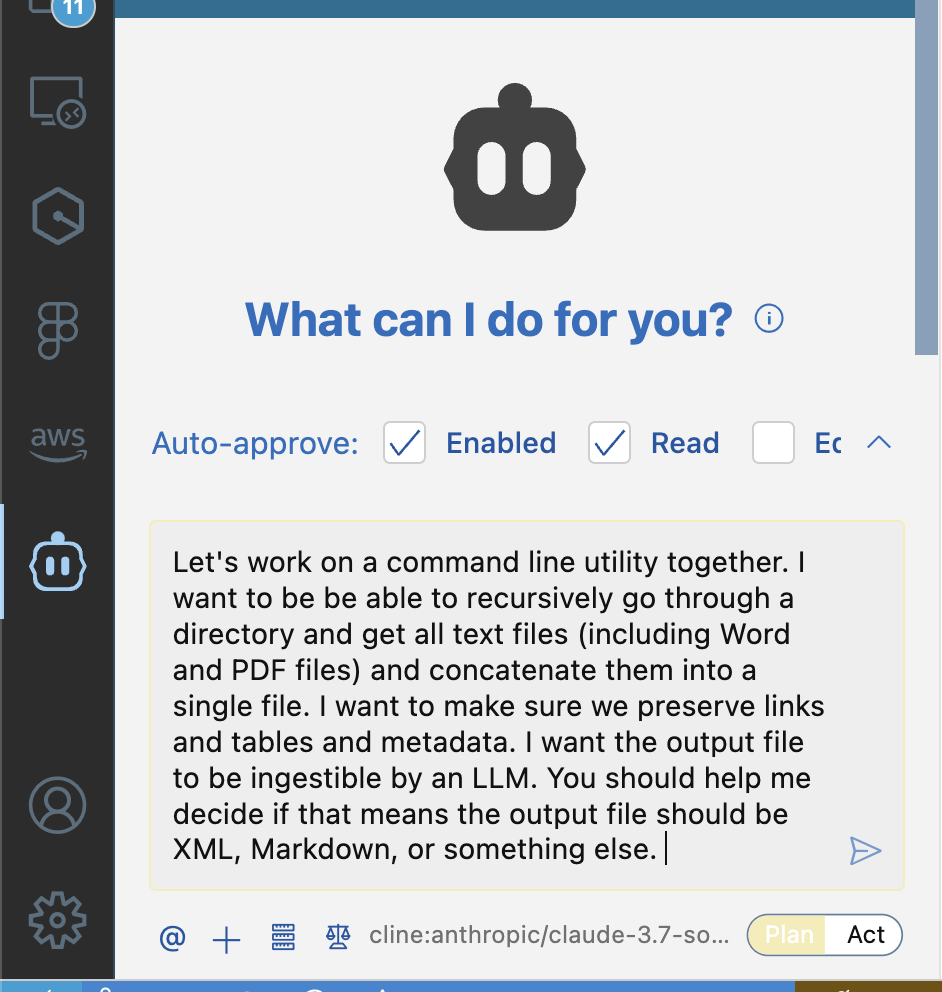

I wanted to use an LLM to help me analyze their work. After uploading a few documents to a model, I thought, “I need a tool that can convert all these different file formats into a single Markdown document that I can feed to an LLM.” It’s not a complex problem: convert the files, join them together, and make sure the output doesn’t exceed the model’s token limit. But instead of writing the code myself, I decided to run an experiment: could I create a command-line utility that recursively walks a directory, converts binary files like Word and PDF documents into text, and concatenates them with all the other text files into one LLM-friendly file – without writing a single line of code myself?
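For a sense of the problem’s scope, here is a minimal, hypothetical sketch of such a utility in Python. It is not the code the agent produced. It assumes several things the post doesn’t specify: the function names (`flatten`, `file_to_text`), the set of text suffixes, and a rough budget of about 4 characters per token in place of a real tokenizer. It uses the standard library’s `html.parser` instead of Beautiful Soup, reads `.docx` files by unzipping their `word/document.xml`, and simply skips PDFs, which would need a third-party library.

```python
"""Hypothetical sketch: flatten a directory of documents into one Markdown file."""
from __future__ import annotations

import sys
import zipfile
from html.parser import HTMLParser
from pathlib import Path
from xml.etree import ElementTree

# Assumption: ~4 characters per token, a rough rule of thumb for English text.
CHARS_PER_TOKEN = 4
# Assumption: an illustrative set of plain-text extensions to concatenate as-is.
TEXT_SUFFIXES = {".md", ".txt", ".py", ".json", ".csv"}
# XML namespace used by WordprocessingML (the body of a .docx file).
W_NS = "{http://schemas.openxmlformats.org/wordprocessingml/2006/main}"


class _TextExtractor(HTMLParser):
    """Collects visible text from HTML, skipping <script> and <style> contents."""

    def __init__(self) -> None:
        super().__init__()
        self.parts: list[str] = []
        self._skip = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip and data.strip():
            self.parts.append(data.strip())


def html_to_text(raw: str) -> str:
    parser = _TextExtractor()
    parser.feed(raw)
    parser.close()
    return "\n".join(parser.parts)


def docx_to_text(path: Path) -> str:
    # A .docx file is a zip archive; the body text lives in word/document.xml.
    with zipfile.ZipFile(path) as zf:
        root = ElementTree.fromstring(zf.read("word/document.xml"))
    paragraphs = []
    for para in root.iter(f"{W_NS}p"):
        text = "".join(node.text or "" for node in para.iter(f"{W_NS}t"))
        if text:
            paragraphs.append(text)
    return "\n".join(paragraphs)


def file_to_text(path: Path) -> str | None:
    """Returns the file's text, or None for formats this sketch doesn't handle."""
    suffix = path.suffix.lower()
    if suffix in (".html", ".htm"):
        return html_to_text(path.read_text(encoding="utf-8", errors="replace"))
    if suffix == ".docx":
        try:
            return docx_to_text(path)
        except (zipfile.BadZipFile, KeyError, ElementTree.ParseError):
            return None  # not a readable .docx; skip it
    if suffix in TEXT_SUFFIXES:
        return path.read_text(encoding="utf-8", errors="replace")
    return None  # e.g. PDFs would need a third-party library; skipped here


def flatten(root: Path, max_tokens: int = 100_000) -> str:
    """Concatenates every supported file under root, one ## heading per file."""
    budget = max_tokens * CHARS_PER_TOKEN
    sections: list[str] = []
    used = 0
    for path in sorted(p for p in root.rglob("*") if p.is_file()):
        text = file_to_text(path)
        if text is None:
            continue
        section = f"## {path.relative_to(root)}\n\n{text.strip()}\n"
        if used + len(section) > budget:
            break  # stop before exceeding the estimated token budget
        sections.append(section)
        used += len(section)
    return "\n".join(sections)


if __name__ == "__main__" and len(sys.argv) > 1:
    print(flatten(Path(sys.argv[1])))
```

Running something like `python flatten_docs.py path/to/docs > combined.md` (the script name is hypothetical) would print one Markdown document with a `##` heading per source file. Stopping at the first file that would exceed the budget keeps the sketch short; a real tool might truncate or split the output instead.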

The Plan/Act Paradigm

Right now (and this will change soon), many coding agents (e.g., Cline and Cursor) let a developer switch between distinct modes of interaction. These modes go by different names, but they do roughly the same things.

In “plan” mode, an agent reasons through the problem, breaks it down into steps, and creates a roadmap for implementation. In “act” mode, the agent starts executing: writing code, installing dependencies, running tests, and iterating based on results.

Cursor calls these two modes “Ask” (for planning) and “Agent” (for acting). Cline calls them “Plan” and “Act”. In plan mode, your job is to have a conversation with the agent. Here was my opening request:

After some back and forth with the agent, the developer can flip the mode from plan to act. In act mode, the agent can be granted autonomy to do many of the things a developer might do to solve this problem. In this case, the agent:

- Created a virtual environment

- Used `pip` to install packages like Beautiful Soup

- Wrote unit tests and integration tests

- Executed tests and fixed failing code

- Added documentation and error handling

The Human in the Loop

While the agent did the heavy lifting, I wasn’t completely hands-off. I provided guidance when it got stuck, restarted sessions when the context window got too polluted, and corrected its approach when it wrote a test that was hardcoded to pass. But here’s what’s interesting: while the agent was coding, I was free to do “real work”. Attend meetings. Take calls. Review PRs. Work on a blog post.
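To make “hardcoded to pass” concrete, here is a hypothetical illustration in Python built around a made-up `estimate_tokens` helper (again assuming roughly 4 characters per token); it is not taken from the actual tool. The first test never calls the function, so it passes even if the function is broken. The second checks the function against answers worked out by hand.

```python
def estimate_tokens(text: str) -> int:
    """Hypothetical helper: rough token count assuming ~4 characters per token."""
    return -(-len(text) // 4)  # ceiling division without importing math


def test_estimate_tokens_hardcoded():
    # Anti-pattern: the "result" is a literal, so estimate_tokens() is never
    # exercised and this test passes no matter what the function returns.
    result = 3
    assert result == 3


def test_estimate_tokens_real():
    # Exercises the function on inputs whose answers are known in advance.
    assert estimate_tokens("") == 0
    assert estimate_tokens("abcd") == 1
    assert estimate_tokens("hello world") == 3  # 11 chars -> ceil(11 / 4) = 3
```

Catching the first kind of test is exactly the evaluation work that stays with the human: both tests go green, but only one of them verifies anything.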

The entire process took about two hours of “agent time” (time the agent actually spent planning and working), spread across a couple of days. The thing that takes the most getting used to is that the AI is never impatient. It could ask me a question during its planning phase (for example, which of two PDF libraries to use), and I could come back hours later with my decision and it would resume right where it left off. This, of course, is very different from working with another developer, and you have to ignore the nagging feeling that someone is waiting on you to make a decision. Whether you make the agent wait a minute or a month, it does not care.

The final result was a fully functional CLI tool with a test suite, security checks, and configurable parameters–all without me writing a single line of code (outside of making some README updates and removing some of the many comments it left in the code).

From Actors to Planners

This was a small, insignificant, personal project that would have taken me half a day or so to write myself. It took a lot of back-and-forth conversation in plan mode, but the experience is already much better than what the tools offered just six months ago, in late 2024. Traditionally, engineers have been valued primarily as “actors”. Good ones can write code in multiple languages, debug complex issues, and ship reliable products that solve real problems. But as AI agents become more capable at these tasks, the role of those engineers is shifting toward being “planners” – people who can envision solutions, clearly define requirements, and guide AI systems to build those same reliable products.

Before this experiment, I had estimated that this transition would take a couple of years, arriving around 2027–2028. Now I believe it will happen much sooner. Within a year, I suspect many routine development tasks (the kind of work a mid-level developer with five years of experience does today) will be handled primarily by AI agents, with humans providing high-level direction rather than implementation details.

This experiment has also led me to change my belief that the human in the loop would need extensive “builder experience”. I don’t think that’s the case anymore. What will be required is the ability to plan effectively, define problems clearly, and verify that the solution the agent provides gets the job done. I don’t think we’ll need computer science degrees to do any of that, much as you don’t need formal training in auto mechanics to describe a car and what it should be able to do. The ability to work with agents to build things will become more valuable than the ability to implement those things yourself.

This shift means we need to think hard about how we train engineers, structure teams, and build software. If the majority of implementation work can be handled by AI agents, then our educational systems and professional development need to emphasize different skills.

At a minimum, we need to have proficiency in:

- Problem formulation: Clearly defining problems in ways that can be solved

- Requirement specification: Being able to articulate clear requirements that are testable

- Evaluation and verification: Determining whether a solution actually solves the intended problem

To excel and stand out amongst peers, we’ll need to have:

- Systems thinking: Understanding how components interact in complex systems

- Architectural vision: The ability to see beyond immediate solutions to design flexible, scalable systems that anticipate future needs

The tools being built today are not just getting better at writing code; they’re getting better at understanding intent, reasoning about trade-offs, and learning from feedback. They can even be given rules and preferences for programming language, style, and patterns, so that the work they produce takes on the personality of the planner.

I’ll close this post by admitting something I don’t want to admit. For years, I’ve told any student who would listen: “Get a degree in Computer Science.” I’ve stopped telling them that. I don’t really know what to tell them now. Recently, I’ve been telling them to get a job doing something hands-on. Mechanical engineering. Welding. Construction. Figure out what it will take to service and repair humanoid robots. How to work on rockets. Or go the route my daughter has: get away from technology completely and work two jobs, part-time at the zoo and part-time at the botanical garden.

Coding as most of us knew it is changing into something we’re not quite sure how to prepare people for yet. What I do know is this: if you want to excel in the field we used to call “software engineering”, get really good at planning.

- @jbnunn